What do your Facebook posts, who you follow on Instagram and who you interact with the most on social media say about you? According to the tech startup Voyager Labs, that information could help police figure out if you have committed or plan to commit a crime.

Voyager Labs is one of dozens of US companies that have popped up in recent years with technology that purports to harness social media to help solve and predict crime.

Pulling information from every part of an individual’s various social media profiles, Voyager helps police investigate and surveil people by reconstructing their entire digital lives – public and private. By relying on artificial intelligence, the company claims, its software can decipher the meaning and significance of online human behavior, and can determine whether subjects have already committed a crime, may commit a crime or adhere to certain ideologies.

But new documents, obtained through public information requests by the Brennan Center, a non-profit organization, and shared with the Guardian, show that the assumptions the software relies on to draw those conclusions may run afoul of first amendment protections. In one case, Voyager indicated that it considered using an Instagram name that showed Arab pride or tweeting about Islam to be signs of a potential inclination toward extremism.

The documents also reveal Voyager promotes a variety of ethically questionable strategies to access user information, including enabling police to use fake personas to gain access to groups or private social media profiles.

Voyager, a nine-year-old startup registered as Bionic 8 Analytics with offices in Israel, Washington, New York and elsewhere, is a small fish in a big pond that includes companies like Palantir and Media Sonar. The Los Angeles police department trialed Voyager software in 2019, the Brennan Center documents show, and engaged in a lengthy back-and-forth with the company about a permanent contract.

But experts say Voyager’s products are emblematic of a broader ecosystem of tech players answering law enforcement’s calls for advanced tools to expand their policing capabilities.

For police, the appeal of such tools is clear: use technology to automatically and quickly see connections that might take officers much longer to uncover, or to detect unnoticed behaviors or leads that a human might not pick up on because of lack of sophistication or capacity. With immense pressure on departments to keep crime rates low and prevent attacks, technology that promises fast and efficient law enforcement decisions is an attractive value proposition. New and existing documents show the LAPD alone has worked or considered working with companies such as PredPol, Media Sonar, Geofeedia, Dataminr, and now Voyager.

But for the public, social media-informed policing can be a privacy nightmare that effectively criminalizes casual and at times protected behavior, experts who have reviewed the documents for the Guardian say.

As the Guardian previously reported, police departments are often unwilling to relinquish the use of those tools even in the face of public outcry and in spite of little proof that they help to reduce crime.

Experts also point out that companies like Voyager often use buzzwords such as “artificial intelligence” and “algorithms” to explain how they analyze and process information but provide little evidence that their technology works.

A Voyager spokesperson, Lital Carter Rosenne, said the company’s software was used by a wide range of clients to enable searches through databases but said that Voyager did not build those databases on its own or supply Voyager staffers to run its software.

“These are our clients’ responsibilities and decisions, in which Voyager has no involvement at all,” Rosenne said in an email. “As a company, we follow the laws of all the countries in which we do business. We also have confidence that those with whom we do business are law-abiding public and private organizations.”

“Voyager is a software company,” Rosenne said in answer to questions about how the technology works. “Our products are search and analytics engines that employ artificial intelligence and machine learning with explainability.”

Voyager did not respond to the detailed questions about who it has contracts with or how its software draws conclusions on a person’s support for specific ideologies.

The LAPD declined to respond to a request for comment.

‘A guilt-by-association system’

The way Voyager and companies like it work is not complicated, the documents show. Voyager software hoovers up all the public information available on a person or topic – including posts, connections and even emojis – analyzes and indexes it and then, in some cases, cross-references it with non-public information.

Internal documents show the technology creates a topography of a person’s entire social media existence, specifically looking at users’ posts as well as their connections, and how strong each of those relationships is.

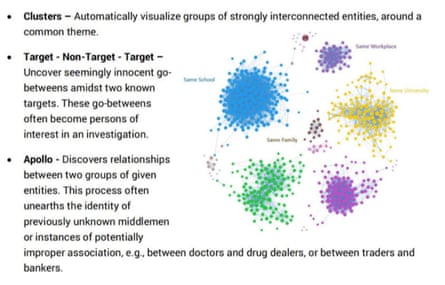

The software visualizes how a person’s direct connections are connected to each other, where all of those connections work, and any “indirect connections” (people with at least four mutual friends). Voyager also detects any indirect connections between a subject and other people the customer has previously searched for.
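The documents do not describe how Voyager computes these links, but the stated rule (people with at least four mutual friends) can be illustrated with a minimal sketch. Everything below is a hypothetical illustration, not Voyager’s code: the function name `indirect_connections`, the `min_mutual` parameter and the sample friendship data are all assumptions made for the example.

```python
from collections import defaultdict

def indirect_connections(friends, subject, min_mutual=4):
    """Return non-friends of `subject` who share at least `min_mutual` friends.

    `friends` maps each person to the set of their direct connections.
    This is a hypothetical illustration of the rule quoted in the documents,
    not a reconstruction of any vendor's actual implementation.
    """
    direct = friends.get(subject, set())
    mutual_counts = {}
    for friend in direct:
        for other in friends.get(friend, set()):
            # Skip the subject and anyone who is already a direct connection.
            if other != subject and other not in direct:
                mutual_counts[other] = mutual_counts.get(other, 0) + 1
    return {p: n for p, n in mutual_counts.items() if n >= min_mutual}

# Invented sample data: "s" is the subject; "x" and "y" are not friends of "s".
edges = [
    ("s", "a"), ("s", "b"), ("s", "c"), ("s", "d"),
    ("x", "a"), ("x", "b"), ("x", "c"), ("x", "d"),
    ("y", "a"), ("y", "b"),
]
friends = defaultdict(set)
for u, v in edges:
    friends[u].add(v)
    friends[v].add(u)

print(indirect_connections(friends, "s"))
```

In this invented example, “x” shares four friends with the subject and is flagged, while “y” shares only two and is not. The sketch shows how little such a rule relies on: a count of shared contacts, with no information about what any of those people said or did.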

Voyager’s data collection is far reaching. If a person tracked by Voyager software deletes a friend or a post from their own profile, it remains archived in their Voyager profile. The system catalogues not only a subject’s contacts, but also any content or media those contacts posted, including status updates, pictures and geotags. And it draws in second- and third-degree friendships to “unearth previously unknown middlemen or instances of improper association”.

Meredith Broussard, a New York University data journalism professor and author of Artificial Unintelligence: How Computers Misunderstand the World, said it appeared Voyager’s algorithms were making assessments about people based on their online activity and networks, using a process that resembled online ad targeting.

Ad targeting systems place people in “affinity groups”, determining who is most likely to be interested in buying a new car, for example, based on their friends and connections, Broussard explained: “So instead of grouping people into buckets like ‘pet owners’, what Voyager seems to be doing is putting people into ‘buckets’ of likely criminals.”

In the advertising context, many consumers have come to accept this kind of targeting, she said, but the stakes are much higher when it comes to policing.

“It’s a ‘guilt by association’ system,” she said, adding that this kind of algorithm was not particularly sophisticated.

A focus on ‘those most engaged in their hearts’

Voyager software applies a similar process to Facebook groups, pages and events – both public and closed – cataloging recently published content and mapping out the most active users. Documents show the company also allows users to search for posts about specific topics, pulling up all mentions of that term, as well as the location tagged in those posts.

The company claims all of this information on individuals, groups and pages allows its software to conduct real-time “sentiment analysis” and find new leads when investigating “ideological solidarity”. In proposals to the LAPD, the company claimed its artificial intelligence platform was unmatched in its ability to analyze “human behavior indicators”.

Voyager claims its AI can provide insights such as an individual or group’s “social whereabouts”, can uncover hidden relationships and can perform a “sentiment analysis” to determine where someone stands ideologically on various topics, including extremism.

“We don’t just connect existing dots,” a Voyager promotional document read. “We create new dots. What seem like random and inconsequential interactions, behaviors or interests, suddenly become clear and comprehensible.”

A service the company calls VoyagerDiscover presents social profiles of people who “most fully identify with a stance or any given topic”. The company says the system takes into account personal involvement, emotional involvement, knowledge and calls to action, according to documents. Unlike other companies, Voyager claims it doesn’t need extra time to study and process online behavior and instead can make this type of judgment “on the fly”.

“This ability moves the discussion from those who are most engaged online to those most engaged in their hearts,” the documents read.

In one redacted case study Voyager presented to the LAPD when it was pursuing a contract with the agency, the company examined the ways in which it would have analyzed the social media profile of Adam Alsahli, who was killed last year while attempting to attack the Corpus Christi naval base in Texas.

The company wrote that its software used artificial intelligence to examine whether subjects have ties to Islamic fundamentalism, and color coded these profiles as green, orange or red (orange and red seemingly indicating a proclivity toward extremism). “This provides a flag or indication for further vetting or investigation, before an incident has occurred, as part of an effort to put in place a ‘trip wire’ to indicate emerging threats,” the company wrote.

In Alsahli’s case, Voyager said, the company concluded his social media activity reflected “Islamic fundamentalism and extremism” and suggested investigators could further review Alsahli’s accounts to “determine the strength and nature of his direct and indirect connections to other Persons of Interest”.

But the documents show that many aspects of what Voyager pointed out as tripwires or signals of fundamentalism could also qualify as free speech or other protected activity. Voyager, for instance, said 29 of Alsahli’s 31 Facebook posts were pictures with Islamic themes and that one of Alsahli’s Instagram account handles, which was redacted in the documents, reflected “his pride in and identification with his Arab heritage”.

When examining the list of accounts he followed and who followed him, Voyager said that “most are in Arabic” – one of the 100 languages the company said it can automatically translate – and “generally appear” to be accounts posting religious content. On his Twitter account, Voyager wrote, Alsahli mostly tweeted about Islam.

The documents also implicated Alsahli’s connections, writing that three Facebook users he shared posts with could “have had other interactions with him outside social media, or been connecting in the same Islamist circles and forums”.

Parts of the documents were redacted. However, the only visible mention of content that could be seen as explicitly tying Alsahli to fundamentalism consisted of tweets Voyager said he had posted in support of mujahideen.

Julie Mao, the deputy director of Just Futures, a legal support group for immigrants, said she worried the color-coded risk algorithm and Voyager’s choice to study this particular case showed potential bias.

“It’s always easy in hindsight to pick out someone who was violent and say ‘hey, tech works based on them,’” Mao said. “It’s incredibly opaque how Voyager arrived to this threat level (was it something more than expressing religious devotion?) and how many individuals receive similar threat levels based on innocuous conduct. So even by its own logic, it’s a flawed example of accuracy and could lead to over-policing and harassment.”

It’s “basically a stop and frisk tool for police”, Mao said.

Voyager’s claims that it used “cutting-edge AI-based technologies” such as “machine learning”, “cognitive computing”, and “combinatorial and statistical algorithms” were, in effect, just “word salad”, said Cathy O’Neil, a data scientist and CEO of Orcaa, a firm that audits algorithms. “They’re saying, ‘We use big math.’ It doesn’t actually say anything about what they’re doing.”

In fact, O’Neil said, companies like Voyager generally provided little evidence demonstrating their algorithms had the capabilities they claimed. And often, she said, police departments did not require or ask for this kind of data, and companies would be unable to provide evidence if it were requested – because their claims were frequently hyperbolic and unfounded.

The problem with this kind of marketing, O’Neil added, was that it could provide cover for biased policing practices: “If they successfully get people to trust their algorithm, with zero evidence that it works, then it can be weaponized.”

Melina Abdullah, a Black Lives Matter LA co-founder, said she was disturbed to learn about the conclusions Voyager’s software had made about the online activity of Muslim users.

“As a Black Muslim, I’m concerned. I always know that my last name alone flags me differently than other folks, that I’m seen with heightened scrutiny, that there are assumptions made about ‘extremism’ because I’m Muslim,” she said, adding that the records left her with many unanswered questions: “Who have they been flagging and what are the justifications? … It sounds like everybody’s vulnerable to this.”

Fake friends and private messages

Relying on publicly available information, Voyager’s software cobbles together a fairly comprehensive and invasive picture of a person’s private life, the experts said. But the company supplements that data with non-public information it gains access to through two primary channels: warrants or subpoenas and what the company calls an “active persona”.

In the first case, Voyager tech sifts through vast swaths of data law enforcement gets through various types of warrants. Such information can include subjects’ private messages, their location information or the keywords they have searched for. Voyager catalogs and analyzes these often vast troves of user data – an undertaking LAPD officers wrote in emails they would appreciate help with – and cross-references it with social and geographic maps drawn up from public information.

For its Facebook-specific warrant service, Voyager software analyzes private messages to identify the profiles with which subjects communicate most frequently. It then shows a subject’s public posts alongside these private messages to provide “valuable” context. “In numerous cases, its effectiveness has prompted our clients to request additional PDF warrant returns” from tech companies, the documents read. Voyager said it planned to roll out the same warrant-indexing capabilities for Instagram and Snap, which would include image processing capabilities.

John Hamasaki, a criminal defense lawyer and member of San Francisco’s police commission, said he had already had concerns about how judges grant law enforcement access to people’s private online accounts, especially Black and Latino defendants accused of being in gangs: “The degree to which private information is being seized, purportedly lawfully under search warrants, is just way over-broad.”

If police were additionally using software and algorithms to analyze the private data, it compounded the potential privacy and civil liberties violations, he said: “And what conclusions are they drawing from it, and what spin is an expert giving to it? Because ‘gang experts’ are notorious for coming to a conclusion that supports the prosecution.”

There is less detail about the second means through which Voyager software accesses non-public information: its premium service called “active persona”. The documents indicate customers can use what Voyager calls “avatars” to “collect and analyze information that is otherwise inaccessible” on select networks. Using the active persona feature, the company said, its software was able to access and analyze information from encrypted messaging app Telegram. A 2019 product roadmap also shows plans to roll out the “active persona” mechanism for WhatsApp groups, “meaning the user will have to provide the system with an avatar with access to the group from which he wishes to collect”. A timeline Voyager provided to the LAPD shows the company also had plans to introduce a feature that enabled “Instagram private profile collection”.

Experts say the “active persona” feature appears to be another name for fake profiles, and an LAPD officer described the function in an email with Voyager as the ability to “log in with fake accounts that are already friended with the target subject and pulling data”. While police departments across the country have increasingly used fake social media profiles to conduct investigations, the practice may violate Facebook and other platforms’ community standards.

Facebook rules require people to use “the name they go by in everyday life”. The company removes or temporarily restricts accounts that “compromise the security of other accounts” or try to impersonate others. In 2018 police in Memphis, Tennessee, used a fake account under the name Bob Smith to befriend and gather information on activists. In response, Facebook deactivated the account and others like it and told the police department it needed to “cease all activities on Facebook that involve the use of fake accounts or impersonation of others.” Facebook said everyone, including law enforcement, was required to use their real names on their profiles.

“As stated in our terms of services, misrepresentations and impersonations are not allowed on our services and we take action when we find violating activity,” a Facebook spokesperson, Sally Aldous, said in a statement.

The feature also posed privacy and ethical questions, experts said. “I worry about how low the threshold is for tech companies explicitly enabling police surveillance,” said Chris Gilliard, a professor at Macomb Community College and a research fellow at the Harvard Kennedy School’s Shorenstein Center.

“There’s a long history of law enforcement spying on activists – who are engaging in entirely legal activities – in efforts to intimidate people or disrupt movements. Because of this, the bar for when companies aid police surveillance should be really high.”