The 4 Definitions of Multicloud: Part 2 — Workflow Portability

Part 1: Data Portability

Part 2: Workflow Portability

Part 3: Workload Portability

Part 4: Traffic Portability

With the goal of fostering more productive discussions on this topic (and understanding which types of multicloud capabilities are worth pursuing), this series continues with a look at multicloud through the lens of workflow portability.

Workflow Portability

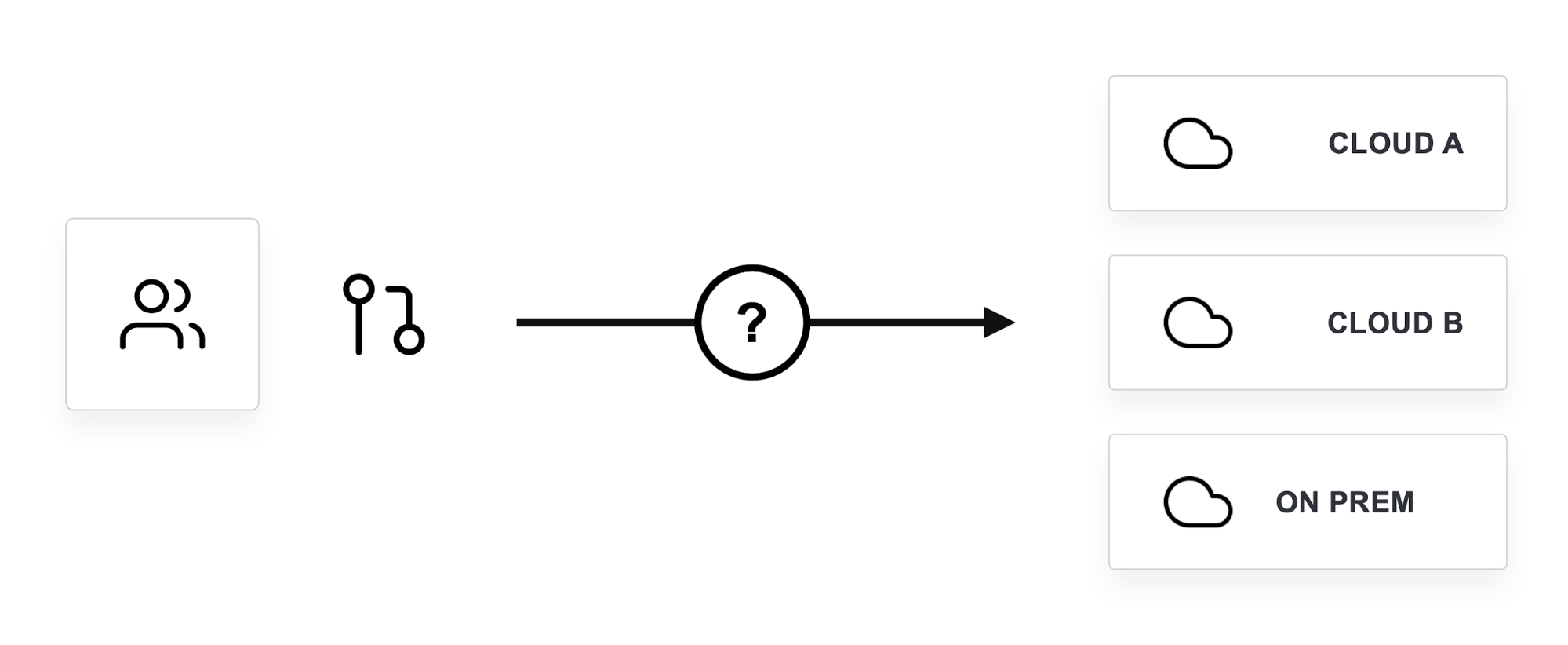

Multicloud workflow portability means having development and operations workflows that are compatible across multiple IT environments — whether they be cloud or on-premises. As a user of these portable workflows, you want to be able to use one toolchain, process and knowledge set to manage operations for applications running on Google Cloud, AWS, Azure and on-prem data centers. In other words — one workflow, run anywhere.

In fact, workflow portability already exists in many pre- and post-deployment tooling categories you’re familiar with:

- Version control: GitHub, GitLab

- CI: Jenkins, CircleCI

- Package management: JFrog Artifactory, Sonatype Nexus

- App deployment (orchestration, scheduling): Kubernetes, HashiCorp Nomad

- Observability & alerting: Datadog, PagerDuty

- Security & secrets management: HashiCorp Vault, AWS Secrets Manager

- Infrastructure provisioning: HashiCorp Terraform, Red Hat Ansible

- Many other categories and tools

When your IT stack starts to splinter into multiple, heterogeneous environments, you have two options for fixing that fragmentation. One is building a portable workflow. The other is migrating to a single tech stack, which in cloud terms means a single cloud vendor.

Multicloud vs. Single-Cloud

For pre-production workflows, portability is already the norm. GitHub and other version control and CI/CD systems anchor the common workflows of most software development today, and from there they can integrate with almost anything. Most of today’s challenges come from the fragmentation of the workflows that follow, in deployment and production.

One way to fix this fragmentation is a single-cloud native tech stack, which does not make your workflow portable; as long as you never use another cloud for infrastructure, you won’t need portability. The other way is to use tools that work across multiple clouds.

When One Cloud Is Enough

Single-cloud is usually the right choice for a small company or a large company getting started in the cloud with a few projects. Platform-native service offerings should work seamlessly with each other during deployment, but think carefully about which services you choose and how tightly you lock yourself into using them.

If you:

- Plan to grow into a large-scale company, or

- Think there’s a possibility of acquiring a company (or being acquired)

Then you should consider choosing technologies that have cross-cloud compatibility early on, because multicloud is probably in your future whether you want it or not.

When Multicloud Is Inevitable

For large companies like those in the Global 2000, multicloud isn’t much of a question anymore; it’s a reality. A single stack is not a realistic option.

Legacy Systems

Remember that most of the companies that make up the backbone of our world economy (banks, insurance companies, energy, healthcare and biotech) existed before the cloud did. Think of such an IT organization as a mature tree: each generation of technology adds a new “ring,” and, like a tree’s inner rings, every earlier generation is still there. For these companies, that can mean an extensive physical footprint, possibly multiple data centers. Many firms won’t leave those investments behind, and some are even investing further in on-prem.

Acquisitions

Acquisitions are key to the growth strategies of large enterprises, and potential acquisitions aren’t going to stop just because the two companies use different clouds. On either side of an acquisition, engineers may end up with new apps and infrastructure that are all-in on a different cloud platform.

Discounts

Large companies often end up with multimillion-dollar cloud budgets. When cloud vendors compete for that budget, companies often get attractive discounts or credits. Those provide a compelling economic incentive to distribute workloads between multiple providers.

Optimizations

Some might say that optimizing your apps is overkill as a justification for multicloud. They might be right if optimization were the only reason, but usually it isn’t. Each cloud vendor clearly has areas of excellence, things it does better than the others. AWS has excellent primitives. Google has great data services. Alibaba has a significant presence in China. And you’ll see some companies that absolutely love Azure for a portion of their apps, because so many older systems are built on Active Directory and Microsoft middleware services, and Azure makes it relatively easy to run those systems in the cloud compared to the other vendors.

Why Single-Cloud Doesn’t Usually Work for Large Companies

Given the legacy systems, acquisitions and technological diversity at large companies, migrating everything to one cloud is often not possible. There are a few reasons why; the main one is cost.

Cost

The time and energy required to migrate a workload to one cloud, multiplied by every workload that needs to move after something like an acquisition, can be immense. As for legacy systems and company data centers, sometimes it’s simply cheaper, safer and/or legally simpler to keep using that infrastructure. That’s not to say that maintaining those legacy systems and integrating them with newer ones comes without its own costs and complexities, but some of the integration complexity can be abstracted away.

Speed

Legacy systems and multicloud integration can slow down your applications and delivery, but there are also strategies for improving speed. More importantly, integration typically takes far less time than migrating everything to a single cloud. Integrating systems with consistent, portable workflows will almost always bring faster time to value for new acquisitions than a complete migration to a new cloud.

Scalability, Resilience, Observability, Manageability

The remaining points of comparison between single-cloud and multicloud depend on the multicloud tooling you choose. There are workflow portability solutions that can provide just as much scalability, resilience, observability and manageability in the post-deploy workflow as the cloud native equivalents you would use in a one-stack setup.

Workflow Portability vs. Fragmentation

So, there are many reasons why organizations probably can’t or won’t migrate everything to one cloud. Why not use the native tools for each cloud and have specialists who deeply understand their assigned cloud? Each team would be fluent in its environment’s unique services, tooling and syntax.

This is known as a fragmented approach: it involves a separate branch of workflows for each cloud deployment.

Fragmentation’s Inherent Shortfalls

The fragmented approach gets complex very quickly. Imagine having to build specialist teams for two to three different clouds, each with different workflows for provisioning, service-based networking, security (credential and key management) and deployment orchestration/scheduling. The salary and complexity costs will usually outweigh the likely small advantages of specialization.
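As a rough, back-of-the-envelope sketch (assuming, as a simplification, that every pairing of a cloud with a workflow category is a separate workflow to build, document and staff), let C be the number of clouds and K the number of workflow categories:

```latex
W_{\text{fragmented}} = C \times K, \qquad W_{\text{portable}} = K
% Example with C = 3 clouds and K = 4 categories
% (provisioning, service-based networking, security, orchestration/scheduling):
W_{\text{fragmented}} = 3 \times 4 = 12, \qquad W_{\text{portable}} = 4
```

Under this simplification, each additional cloud adds another K workflows to the fragmented approach, while the portable approach stays at K; each existing workflow just has to support one more target.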

The diagram below illustrates the fragmentation of cloud tooling well. Moving from static, dedicated infrastructure on the left to dynamic cloud and on-premises infrastructure on the right, you can see that areas like provisioning are very cloud-specific. Each cloud platform has its own provisioning tool (AWS CloudFormation, Azure Resource Manager and Google Cloud Deployment Manager, for example), and each is compatible only with its own platform. This means a multicloud company using those tools would not have workflow portability.

However, some tools work across different clouds. HashiCorp Terraform is in the private cloud column (and many other tools could go in that spot as well), but it is well known for being able to provision across all of the major public clouds too. It’s cloud-agnostic and can act as a single provisioning platform across every cloud platform. This is a good example of a tool that enables multicloud workflow portability, specifically for a provisioning workflow.

Workflow Portability’s Comparative Strengths

If you approach each of the diagram’s categories with a single, portable workflow, you don’t need a specialist team for each of your two to three clouds. You need only one team for your single-workflow solution, and a portable workflow can also make it easier than cloud native workflows would to bring your on-prem infrastructure and legacy systems into a hybrid cloud workflow.

Taking Terraform as an example again, it gives developers a common method for writing infrastructure as code to define resources such as servers, databases and load balancers. They can then configure and provision those resources on almost any cloud or on-prem setup.

Being open source is another key enabler of workflow portability. In Terraform’s case, it lets third-party developers and companies create plugins (called providers) so Terraform can provision their unique technology. For services from the major cloud vendors, HashiCorp already maintains first-class plugins that are updated frequently to support new and updated services on day one.
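To make the plugin idea concrete, here is a minimal Go sketch of one provisioning workflow dispatching to pluggable providers. Everything in it is hypothetical: `Resource`, `Provider`, `stubProvider` and `Apply` are illustrative names, not Terraform’s actual plugin SDK, and the stub providers return fake IDs instead of calling any cloud API.

```go
package main

import (
	"fmt"
	"sort"
)

// Resource is a hypothetical, provider-neutral description of something to
// provision (a server, database, load balancer, ...).
type Resource struct {
	Name     string
	Kind     string
	Provider string // which plugin handles it, e.g. "aws", "gcp", "onprem"
}

// Provider is a hypothetical plugin interface. Each cloud or on-prem system
// implements it; the workflow that calls it stays the same.
type Provider interface {
	Provision(r Resource) (id string, err error)
}

// stubProvider stands in for a real plugin; it only builds a fake ID.
type stubProvider struct{ prefix string }

func (p stubProvider) Provision(r Resource) (string, error) {
	return fmt.Sprintf("%s:%s/%s", p.prefix, r.Kind, r.Name), nil
}

// Apply runs one workflow over resources destined for any mix of providers,
// failing fast if a resource names a provider with no registered plugin.
func Apply(registry map[string]Provider, resources []Resource) (map[string]string, error) {
	ids := make(map[string]string, len(resources))
	for _, r := range resources {
		p, ok := registry[r.Provider]
		if !ok {
			return nil, fmt.Errorf("no plugin registered for provider %q", r.Provider)
		}
		id, err := p.Provision(r)
		if err != nil {
			return nil, fmt.Errorf("provisioning %s: %w", r.Name, err)
		}
		ids[r.Name] = id
	}
	return ids, nil
}

func main() {
	// Supporting a new platform means registering one more plugin here;
	// the Apply workflow itself does not change.
	registry := map[string]Provider{
		"aws":    stubProvider{prefix: "aws"},
		"gcp":    stubProvider{prefix: "gcp"},
		"onprem": stubProvider{prefix: "dc1"},
	}
	resources := []Resource{
		{Name: "web", Kind: "server", Provider: "aws"},
		{Name: "events", Kind: "database", Provider: "gcp"},
		{Name: "edge", Kind: "load_balancer", Provider: "onprem"},
	}
	ids, err := Apply(registry, resources)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Print in a stable order, since Go map iteration order is random.
	names := make([]string, 0, len(ids))
	for n := range ids {
		names = append(names, n)
	}
	sort.Strings(names)
	for _, n := range names {
		fmt.Println(n, "->", ids[n])
	}
}
```

The design choice being illustrated is that the team learns one workflow (`Apply`), while platform-specific knowledge lives inside each plugin.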

Overall, when comparing a single workflow approach to a fragmented, multi-specialist approach, the cost, speed, scalability, observability and manageability factors all favor workflow portability. Resilience may get a slight edge with a multi-specialist approach, but probably not enough to make it worth the sacrifices in cost and other areas.

The Workflow Should Be Portable, but the Apps Don’t Have to Be

I don’t want to imply that you shouldn’t use any cloud vendor-native services. The advantage of multicloud is being able to run apps that are ideally suited for one cloud without giving up apps that might be ideal for another.

In part one of this series, I talked about data portability, which is a lot harder to figure out than workflow portability. You don’t need data portability or app portability to have workflow portability.

For example, if you wanted to build one app that uses a database in AWS and another that runs analysis on Google’s BigQuery, a single Terraform provisioning workflow could deploy to both services from the same interface. Here, we’re separating the delivery process from runtime dependencies. It makes sense not to build or manage all of your own databases, caches, etc., but be aware that those managed services can pin your apps to their environment.
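A minimal Go sketch of that separation, using hypothetical names throughout (`ManagedService`, `App`, `DeliverySteps` and `PinnedClouds` are illustrative, and the four pipeline steps are placeholders, not a real tool’s stages): every app goes through the same delivery steps, while its managed-service dependencies determine which cloud it is pinned to.

```go
package main

import (
	"fmt"
	"sort"
)

// ManagedService is a hypothetical record of a vendor-native service an app
// depends on at runtime (a managed database, BigQuery, a cache, ...).
type ManagedService struct {
	Name  string
	Cloud string
}

// App pairs an application with its runtime dependencies.
type App struct {
	Name string
	Deps []ManagedService
}

// DeliverySteps is the portable part: the same pipeline for every app,
// regardless of where its dependencies live.
func DeliverySteps(App) []string {
	return []string{"build", "test", "plan", "apply"}
}

// PinnedClouds is the non-portable part: the distinct clouds an app is tied
// to by its managed-service dependencies, sorted for stable output.
func PinnedClouds(a App) []string {
	seen := map[string]bool{}
	var clouds []string
	for _, d := range a.Deps {
		if !seen[d.Cloud] {
			seen[d.Cloud] = true
			clouds = append(clouds, d.Cloud)
		}
	}
	sort.Strings(clouds)
	return clouds
}

func main() {
	apps := []App{
		{Name: "orders", Deps: []ManagedService{{Name: "orders-db", Cloud: "aws"}}},
		{Name: "analytics", Deps: []ManagedService{{Name: "bigquery", Cloud: "gcp"}}},
	}
	for _, a := range apps {
		fmt.Printf("%-9s delivery=%v pinned-to=%v\n", a.Name, DeliverySteps(a), PinnedClouds(a))
	}
}
```

Running it prints identical delivery steps for both apps, with `orders` pinned to `aws` and `analytics` pinned to `gcp`: the workflow is portable even though neither app is.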

Enabling Workflow Portability

In my view, workflow portability is the most achievable and worthwhile implementation of multicloud portability to pursue.

Enabling workflow portability means choosing multicloud-compatible tools. Even for a smaller single-cloud company, not optimizing for workflow portability up front can be really painful later. Watch for tools and services from cloud vendors that can lock you into workflows that only work on their platform. You don’t need to optimize for running on multiple clouds at first, but do optimize the workflow right away.

When Mitchell Hashimoto and I founded HashiCorp, we had a core set of technical principles we wanted to embed into every product we built — we even wrote a Tao about them. One of the key principles in that Tao was “workflows, not technologies.”

So as we built Terraform, Vault, Consul, Nomad and (more recently) Waypoint and Boundary, our goal has always been to create products that provide a common workflow across as many environments as possible. Whether it’s a hybrid or multicloud approach, our belief has always been that we need to meet companies where they are, rather than push them all toward a completely new, homogeneous environment.

The Other Definitions of Multicloud

As this series continues, read about the other three definitions of multicloud — data portability, workload portability and traffic portability — to understand the trade-offs and enablement patterns for each.