If you’ve landed on this page, then either:

- Google has gotten better at understanding that “increase” does not mean “improve” with regard to the query “Increase time to first byte” and has decided to rank this page instead of one of the many pages about reducing time-to-first-byte (TTFB).

- You are someone working in SEO and have seen a tweet mentioning this, or are subscribed to my email list.

Welcome.

Why Would Someone Want to Slow Down Their Website?

I’m writing this because I wanted to increase the ‘time-to-first-byte’ (TTFB) on my website.

This isn’t something ordinary humans should ever want to do, but some client work triggered the desire in me (see: my Google Search History).

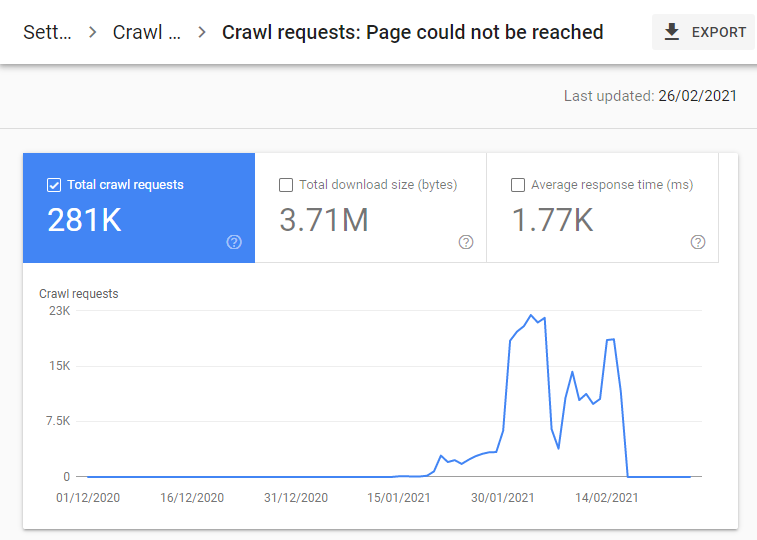

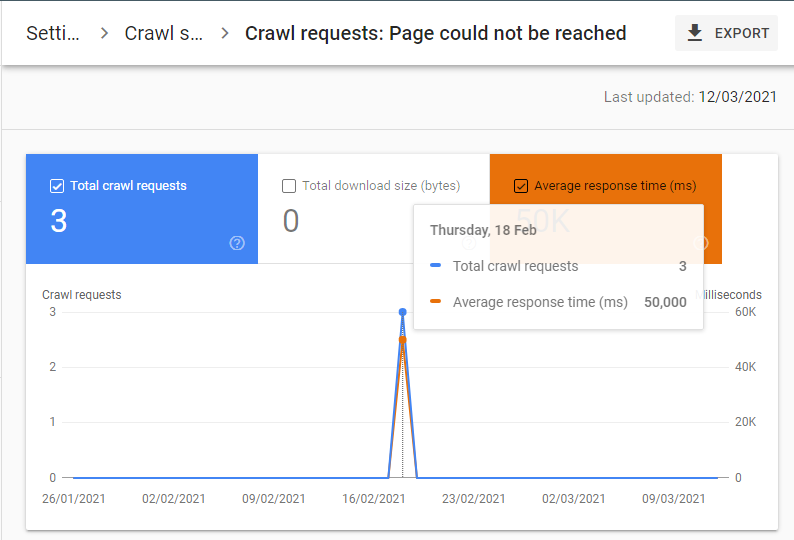

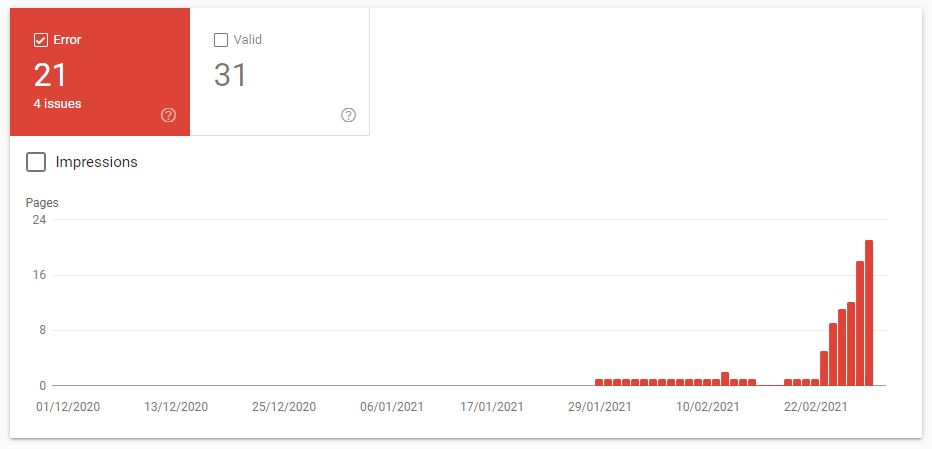

While investigating an issue for a client, I saw a TTFB of >10 seconds (sometimes >2 mins), but only for Googlebot User-Agents. This was paired with huge growth in “Page could not be reached” errors:

This isn’t a particularly common error. Looking up the definition of this issue:

Page could not be reached: Any other error in retrieving the page, where the request never reached the server. Because these requests never reached the server, these requests will not appear in your logs. –https://support.google.com/webmasters/answer/9679690?hl=en

This pairs nicely with another behaviour for that ‘slow death spiral’ we love:

If your site is responding slowly to requests, Googlebot will throttle back its requests to avoid overloading your server. Check the Crawl Stats report to see if your site has been responding more slowly.

I thought the excessively long TTFB (e.g. >10s) Googlebot was encountering might result in Google categorising the URL into the “page could not be reached” bucket.

The implication is that from Google’s perspective:

“We think this request didn’t reach the server because we waited ages and heard nothing back” == “Request didn’t reach the server”.

This is one of those areas of SEO where there isn’t much common knowledge, so any testing is likely to be fruitful either way.

If you have your own website (and don’t mind losing traffic), you have the liberty to test ideas you would never ever recommend to a client. And I wanted to see what happened if I increased the ‘time-to-first-byte’ (TTFB) on my website.

How to Increase Time to First Byte

The easiest way to increase TTFB that I could think of was by introducing an artificial delay on an edge server before returning the response. There are other ways, but I lack the doggedness to ruin my WordPress setup even further.

As has been drummed into me by the community, SEO is all about the user. Human users should not have to suffer for my idiocy. So I only introduced this artificial delay to bots.

To do this, I created a Cloudflare Worker that added an artificial delay for any user with ‘bot’ in the User-Agent and set an HTTP Header that would remind me why the hell this was happening.

Here it is:

    addEventListener('fetch', event => {
      event.respondWith(handleRequest(event.request))
    })

    // Resolve after `ms` milliseconds.
    function sleep(ms) {
      return new Promise(resolve => setTimeout(resolve, ms))
    }

    async function handleRequest(request) {
      const userAgent = request.headers.get('User-Agent') || ''

      // Fetch from the origin, then copy the response so its headers are mutable.
      let response = await fetch(request)
      response = new Response(response.body, response)

      // Delay anything with 'bot' in the User-Agent (case-sensitive) and flag it.
      if (userAgent.includes('bot')) {
        await sleep(15000)
        response.headers.set('bigdelay', 'yes')
      }

      return response
    }

(I am good enough at copy+pasting to achieve this. I have no idea what I am doing but it works. Please don’t cyber-bully me.)

This sets a 15 second delay for bots.
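To see which clients that substring check would catch, here is a minimal sketch. The `isDelayed` helper and the sample User-Agent strings are illustrative assumptions rather than anything from my logs; the check itself mirrors the `includes('bot')` line in the Worker.

```javascript
// Hypothetical helper mirroring the Worker's check: a case-sensitive
// substring match on the User-Agent header.
function isDelayed(userAgent) {
  return (userAgent || '').includes('bot')
}

// Sample User-Agent strings, for illustration only.
const samples = {
  googlebot: 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)',
  bingbot: 'Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)',
  chrome: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
  upperCaseOnly: 'SomeBot/1.0',
}

for (const [name, ua] of Object.entries(samples)) {
  console.log(name, isDelayed(ua))
}
```

Because the match is case-sensitive, a client that only ever writes “Bot” with a capital B would slip through undelayed. Lowercasing the header first would catch those too, if you wanted to be thorough about ruining your own website.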

Note: I’ve increased the delay even further, so you’ll be waiting for ~100 seconds if you do want to try the below.

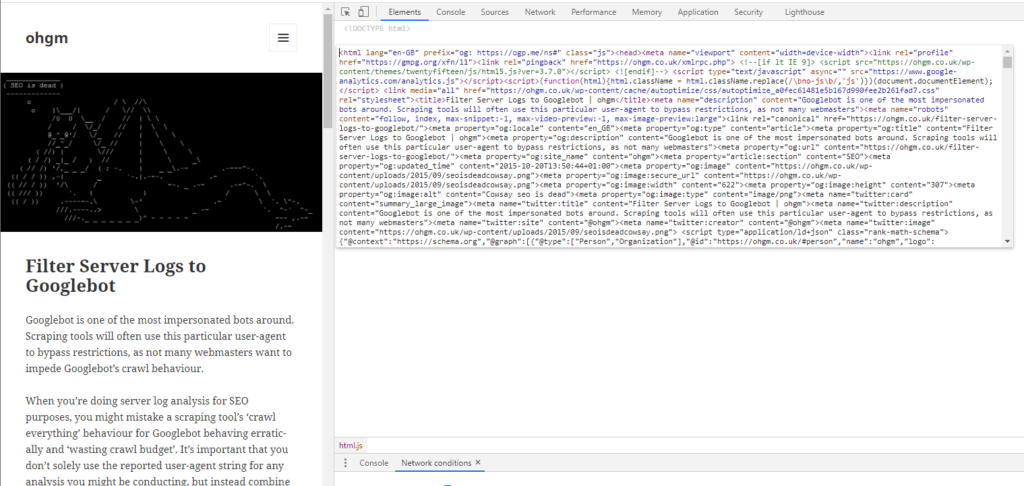

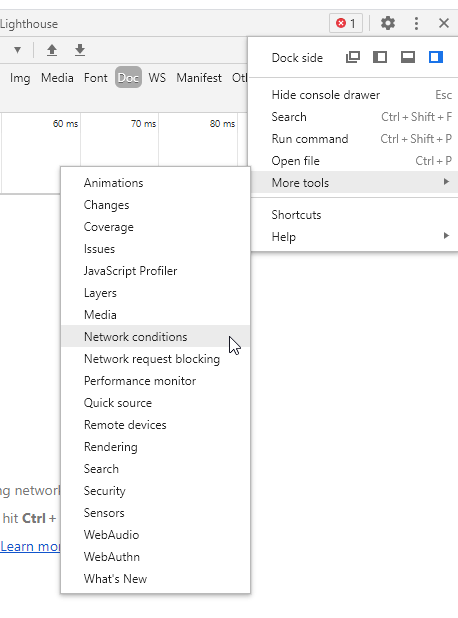

You can see how this feels in Chrome by:

- Opening a different post on this website in a new tab.

- Going into Developer Tools > More Tools > Network Conditions and selecting one of the Googlebots.

- Hard refreshing (CTRL+F5) the page.
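If you’d rather put a number on it than stare at a blank tab, the steps above can be roughly reproduced in a script. This is a minimal sketch, assuming Node 18+ (for the global `fetch`); `measureTtfb` and the injectable `fetchImpl` parameter are names I’ve made up for illustration. `fetch` resolves once the response headers arrive, so the elapsed time is only an approximation of TTFB (it also includes DNS and connection setup).

```javascript
// Time how long a request takes to return response headers, as a
// stand-in for TTFB. `fetchImpl` is injectable so the function can be
// exercised without a network; it defaults to the global fetch.
async function measureTtfb(url, userAgent, fetchImpl = fetch) {
  const start = Date.now()
  const response = await fetchImpl(url, { headers: { 'User-Agent': userAgent } })
  const ttfbMs = Date.now() - start
  return {
    status: response.status,
    ttfbMs,
    // The Worker sets this header on delayed responses.
    delayed: response.headers.get('bigdelay') === 'yes',
  }
}

// Example usage (placeholder URL; this would make a real network request):
// const ua = 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)'
// measureTtfb('https://example.com/', ua).then(console.log)
```

Run it from Node rather than a browser console, since browsers may not let a script override the User-Agent header.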

You’ll notice that the page is slow.

Really, really slow.

The browser should take around 15 seconds to load the HTML…and another 15 seconds to load each asset that comes after that:

Wonderful.

Isn’t this Cloaking or something?

“Cloaking refers to the practice of presenting different content or URLs to human users and search engines.” – I’m prioritising human users, but am returning the exact same content to all user-agents.
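If you wanted to sanity-check the “exact same content” claim, a crude comparison might look like the sketch below. It assumes Node 18+ for the global `fetch`; `sameContentForAll` and `fetchImpl` are hypothetical names. A byte-for-byte comparison will also flag pages that legitimately change per request (timestamps, nonces), so treat a mismatch as a prompt to look closer, not as proof of cloaking.

```javascript
// Fetch the same URL once per User-Agent and report whether every body
// is identical to the first one.
async function sameContentForAll(url, userAgents, fetchImpl = fetch) {
  const bodies = []
  for (const userAgent of userAgents) {
    const response = await fetchImpl(url, { headers: { 'User-Agent': userAgent } })
    bodies.push(await response.text())
  }
  return bodies.every(body => body === bodies[0])
}

// Example usage (placeholder URL; makes real, and here very slow, requests):
// sameContentForAll('https://example.com/', ['Googlebot/2.1', 'Mozilla/5.0'])
//   .then(same => console.log(same ? 'same content' : 'content differs'))
```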

(╯ ͠° ͟ʖ ͡°)╯┻━┻

What Happened?

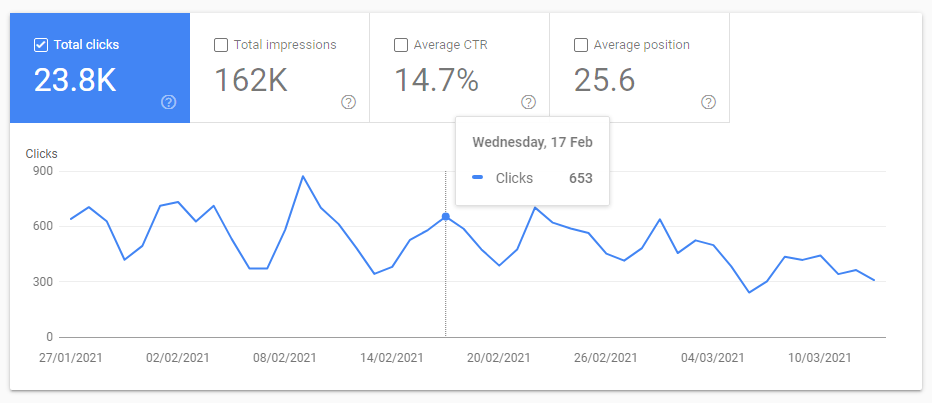

I introduced this delay on Feb 17th 2021.

Q: What do you think happened when I introduced a severe delay for Googlebot?

Before you see the results, please use this opportunity to test your SEO instincts (I’m very fond of SearchPilot’s quizzes for this).

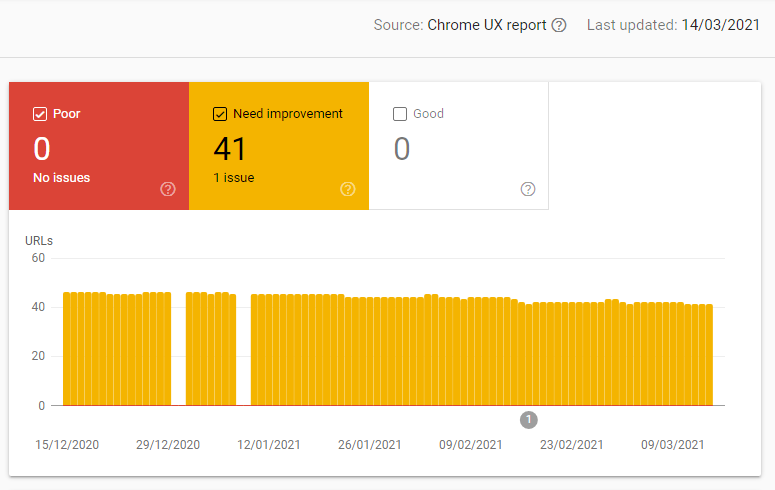

I’d start by showing you traffic, but I don’t want anyone to spoil the above question for themselves. I’ll start with a dull one: Core Web Vitals.

This should make sense – the listed Source for CWV in Search Console is the Chrome UX report. It’s not fed by Googlebot data but actual users, and I didn’t throttle actual users.

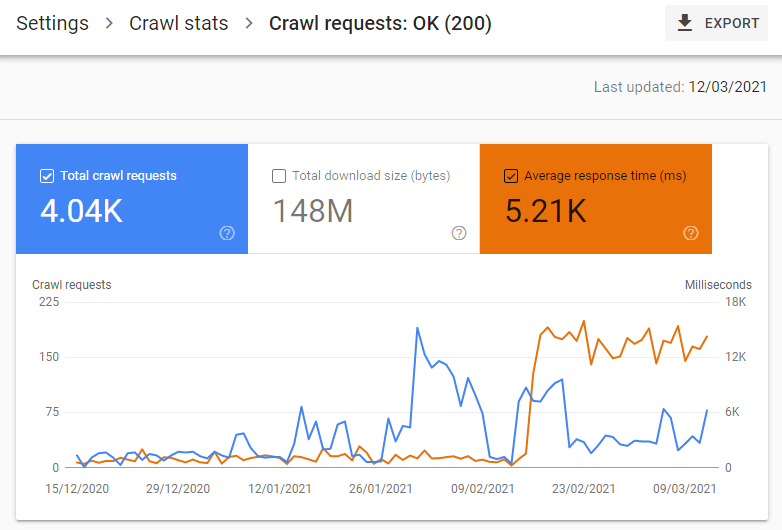

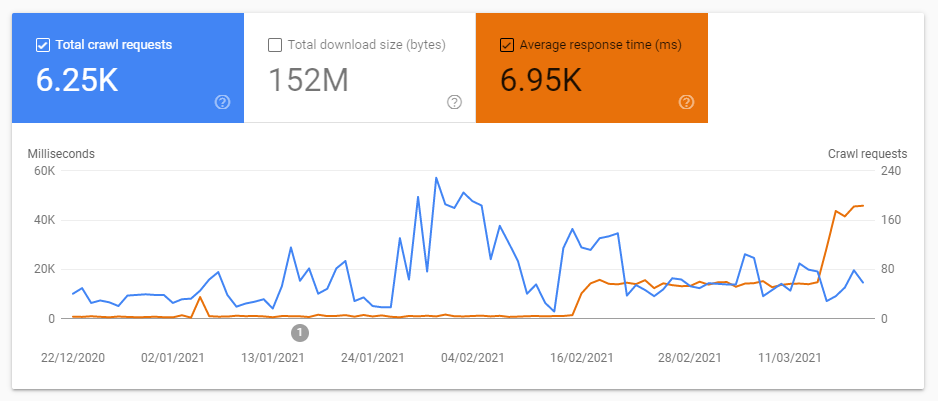

Crawl Rate:

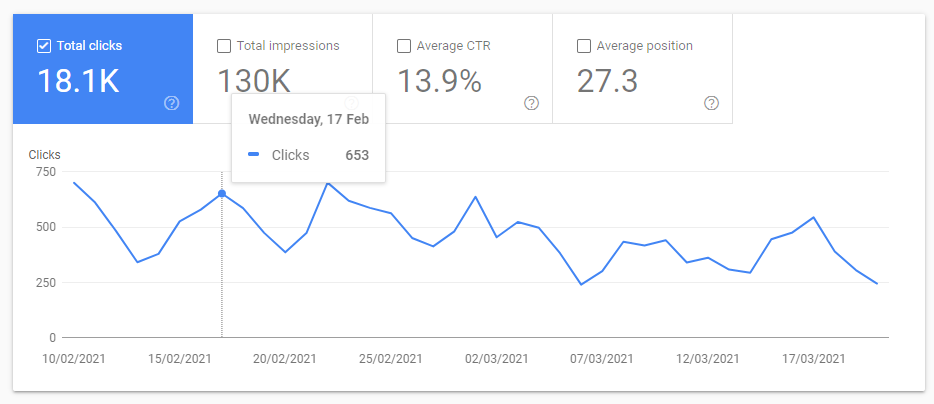

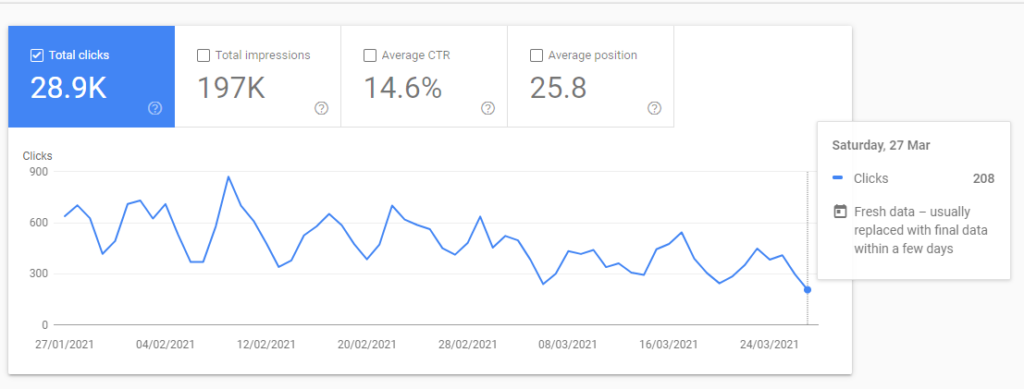

Traffic:

After a couple of weeks it was bad enough that I wanted to turn it off, but it was not as drastic as I had predicted.

I realise that “did you know, having a 15 second TTFB is bad for SEO?” is not exactly a ground-breaking discovery, but I’d like to draw your attention to what I’ve found interesting.

Page Could Not Be Reached

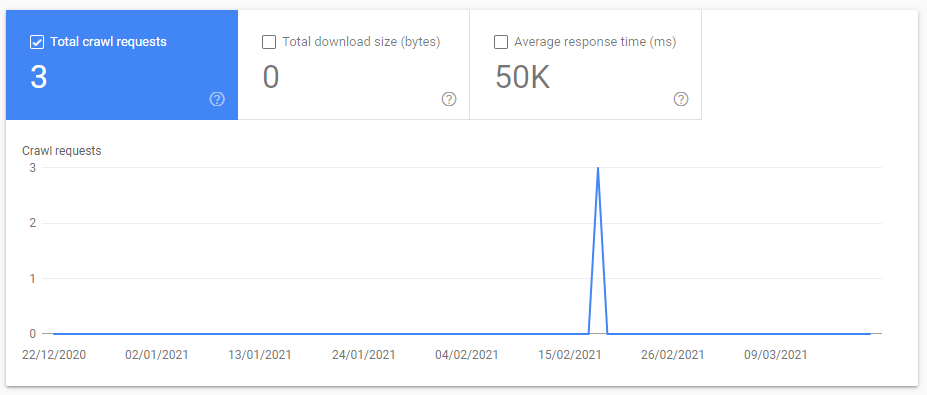

Once implemented I did not see the deluge of “Page Could Not Be Reached” crawl statuses I expected. I saw very, very few:

But I notice that the average response time for each of these requests is 50,000ms. This is a suspiciously round number for an average.

Because this is a Page could not be reached categorisation, it’s supposed to indicate that the server didn’t respond. So what is this time indicating? The neatness suggests that this is possibly the “Give Up” threshold I was hoping to see.
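Here is why a perfectly round average smells like a cut-off: if a crawler abandoned every request at a fixed timeout and logged that timeout as the response time, every sample in the bucket would be the same number, and so would the average. The sketch below uses invented numbers and a hypothetical `recordedTime` helper. It illustrates my speculation and is not a claim about how Googlebot works internally.

```javascript
const GIVE_UP_MS = 50000 // assumed cut-off, taken from the average I saw

// Hypothetical: the time a crawler would log if it stops waiting at the cut-off.
function recordedTime(actualMs, giveUpMs = GIVE_UP_MS) {
  return Math.min(actualMs, giveUpMs)
}

function average(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length
}

// Invented server response times, all slower than the cut-off.
const slowResponses = [61000, 75500, 120000, 50001]
const logged = slowResponses.map(ms => recordedTime(ms))
console.log(average(logged)) // 50000

// Requests that finish before the cut-off produce a messier average.
const mixedResponses = [15200, 15850, 31000]
console.log(average(mixedResponses.map(ms => recordedTime(ms))))
```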

As a result, on the 15th of March 2021 (when I started writing this) I updated this to a 50,001ms delay. This has been the impact on crawl behaviour so far:

But nothing has happened with regards to my speculation. There was not an influx of Page Could Not Be Reached:

This round number is not what I see for this categorisation on other websites: they don’t have average 50k response times (or this would be common knowledge in SEO circles by now). The averages vary and fluctuate well below 50 seconds. Why would Googlebot abandon a real website more readily than this one?

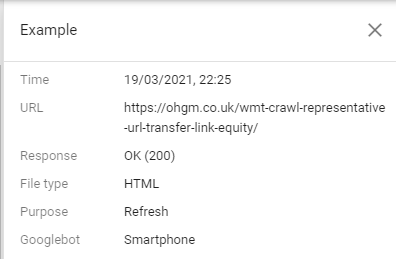

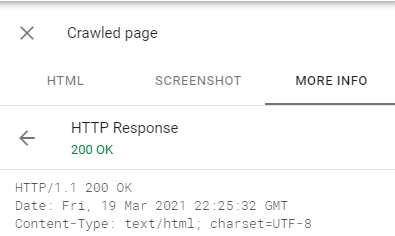

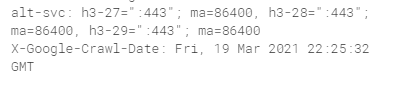

Digging into the examples, I saw that x-Google-crawl-date matches the ‘time’ server header down to the second, which suggests it records when the response was received rather than when the request was made:

I’m not sure what you can do with this information but it’s more clarity on what the interface is actually showing us, which is always helpful.

While “Oliver is wrong about Page Could Not Be Reached, twice” is an exciting conclusion, there’s a plausible explanation for the decline in my website’s performance.

Mobile Friendliness Impact

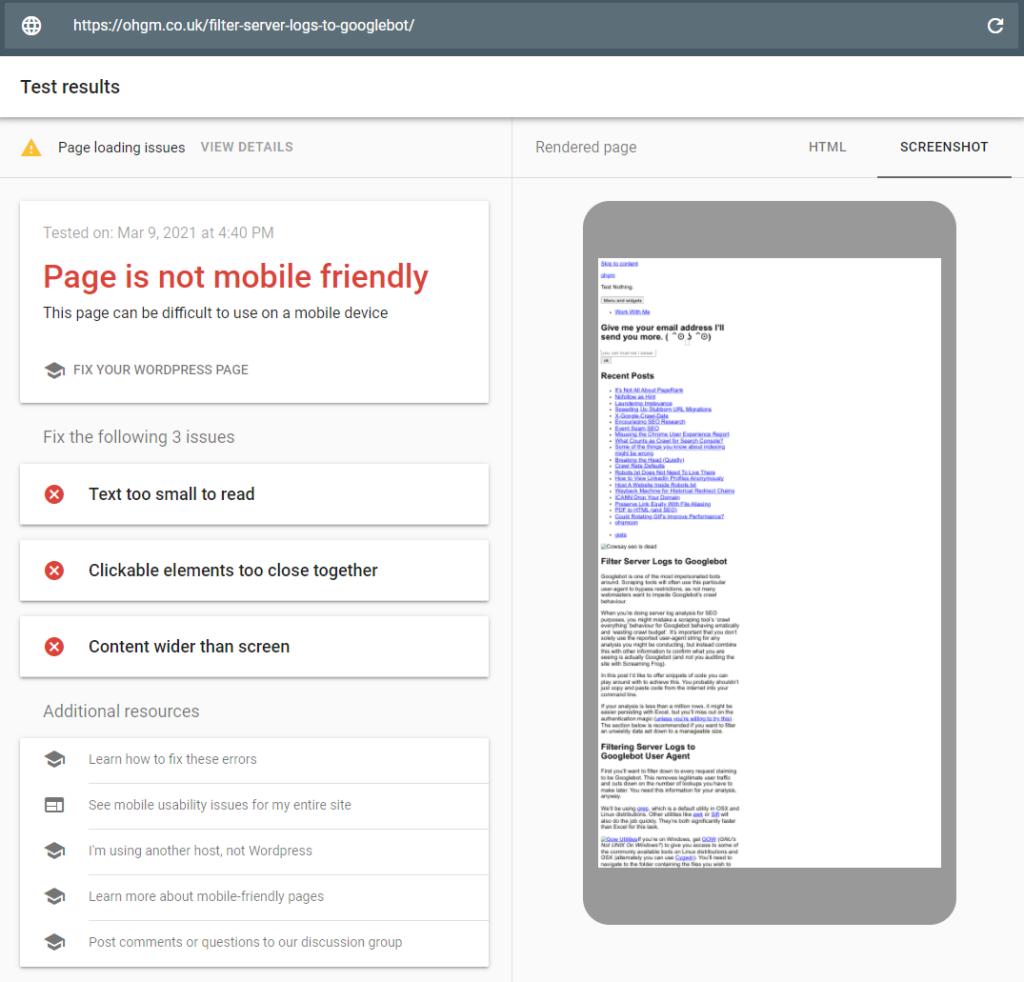

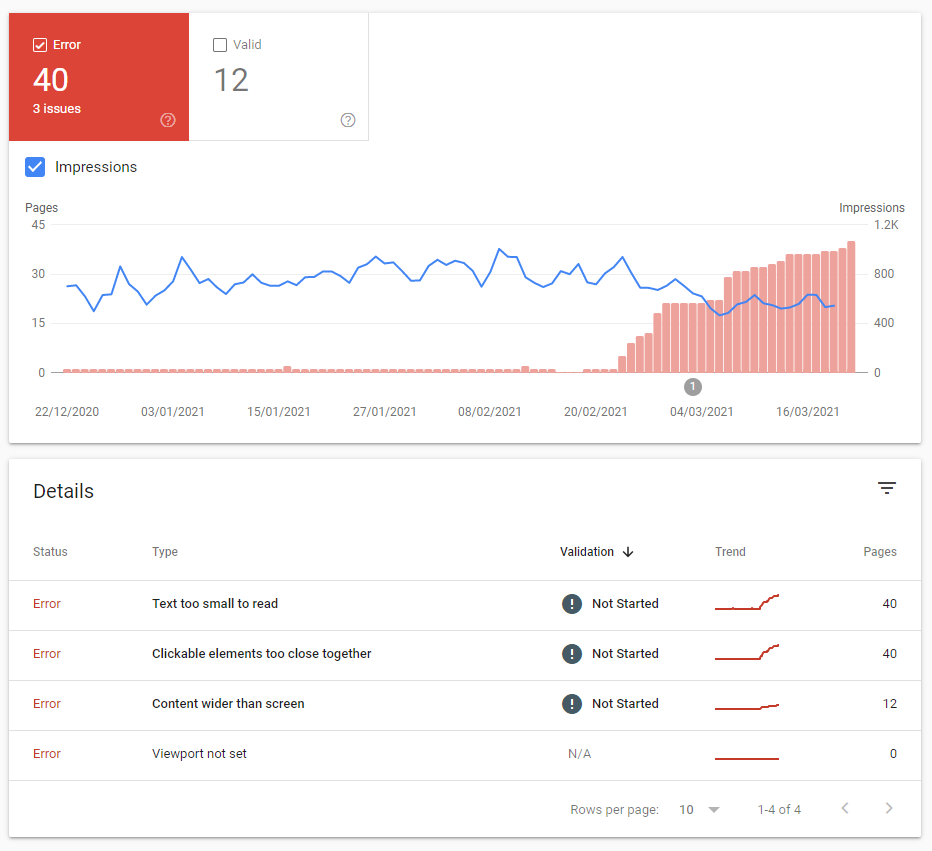

My pages started being identified as not-mobile-friendly almost immediately upon launching the 15 second delay:

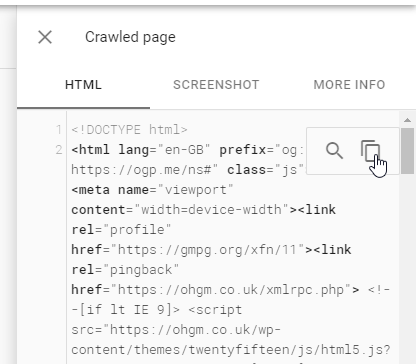

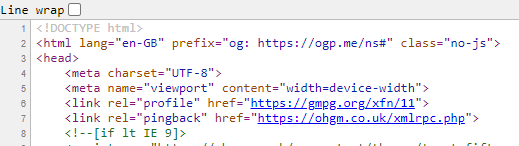

However, if we inspect the crawled page and copy the output into a new Chrome tab, we can see that there doesn’t seem to be an actual issue with mobile friendliness:

Similarly, the standalone mobile friendly test will assess this as a failure:

This suggests (not surprisingly) that the mobile friendly assessment in Search Console has a timeout for receiving assets, and that 30 seconds exceeds it.
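The 30 second figure comes from the requests being chained: the HTML takes 15 seconds, and only then can the CSS and images it references be requested, each of which takes another 15. A rough sketch of that arithmetic, assuming each resource is only requested once its parent has arrived, resources at the same level are fetched in parallel, and the origin’s own response time is negligible next to the artificial delay (`arrivalSeconds` is a name I’ve made up):

```javascript
// depth 0 = the HTML document, depth 1 = assets referenced by the HTML,
// depth 2 = assets referenced by those assets (e.g. a font loaded from CSS).
function arrivalSeconds(depth, delaySeconds = 15) {
  return (depth + 1) * delaySeconds
}

console.log(arrivalSeconds(0)) // 15
console.log(arrivalSeconds(1)) // 30
console.log(arrivalSeconds(2)) // 45
```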

As my site is re-indexed, more of it is declared not-mobile-friendly. There’s a clear drop in impressions that accompanies this:

“Viewport not set” will never be failed by a slower website because this particular assessment does not rely on external resources:
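To illustrate why this check can’t be tripped by slow assets, here is a minimal sketch of a viewport test that only needs the HTML document itself. The `hasViewportMeta` function and the sample page are made up for illustration, and a regex is not how Google (or anyone sensible) parses HTML; the point is only that no CSS or image has to load for the answer to be known.

```javascript
// Rough check for <meta name="viewport" ...> in an HTML string.
// A regex is enough for illustration; it is not a real HTML parser.
function hasViewportMeta(html) {
  return /<meta\b[^>]*\bname\s*=\s*["']?viewport\b/i.test(html)
}

const page = `<!doctype html>
<html>
  <head>
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <link rel="stylesheet" href="/style.css">
  </head>
  <body>Hello</body>
</html>`

console.log(hasViewportMeta(page)) // true
console.log(hasViewportMeta('<html><head></head><body></body></html>')) // false
```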

I’m not immediately sure why “Content wider than screen” doesn’t scale in lockstep like “Text too small to read” and “Clickable elements too close together”.

My best guess is that “Content wider than screen” may refer to requests which have successfully loaded one large image while failing to load CSS. “Text too small to read” and “Clickable elements too close together” would be the behaviour if no assets were successfully loaded.

Finding out the truth here isn’t going to make me any happier, so I’m not going to.

In conclusion:

- I was probably wrong about TTFB being the trigger for Page Could Not Be Reached. For this website at least, a 50 second delay for Googlebot to receive the document is not enough to trigger this response. Googlebot is astonishingly patient and persistent.

- The mobile-friendly assessment used in Search Console does not share this patience.

- My traffic decline here is probably related to mobile friendly failures directly or indirectly.

- The timestamp reported in Search Console is based on the response time rather than the request time.

- Your organic search performance will be harmed if you introduce a >30 second delay for CSS and images to bots.

- You shouldn’t do this.

I’m going to remove the absurd delay fairly soon, which is in part motivating the hasty publication.

If you liked reading this more than I enjoyed writing it (I did not enjoy writing this one), share it on Twitter.

Thanks for reading!

On the 29th of March 2021 I’m disabling the delay rule. As you might expect, the proportion of mobile-friendly pages has continued to decline, as has the traffic I’m receiving from organic search:

We’ll see if this holds true.
